Learning cognitive maps for vicarious evaluation

Image credit: Unsplash

Abstract

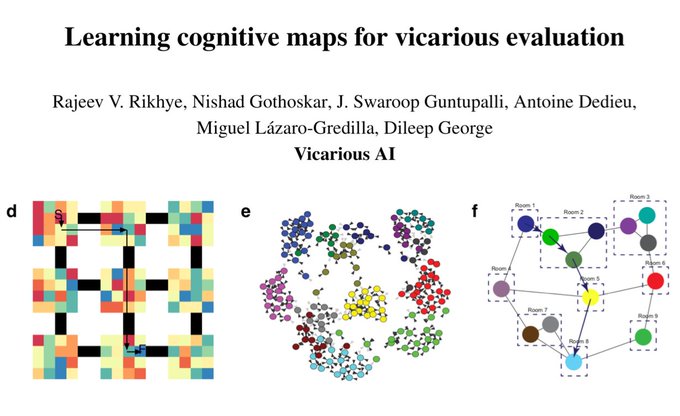

Cognitive maps enable us to learn the layout of environments, encode and retrieve episodic memories, and navigate vicariously for mental evaluation of options. A unifying model of cognitive maps will need to explain how the maps can be learned scalably with sensory observations that are non-unique over multiple spatial locations (aliased), retrieved efficiently in the face of uncertainty, and form the fabric of efficient hierarchical planning. We propose learning higher-order graphs – structured in a specific way that allows efficient learning, hierarchy formation, and inference – as the general principle that connects these different desiderata. We show that these graphs can be learned efficiently from experienced sequences using a cloned Hidden Markov Model (CHMM), and uncertainty-aware planning can be achieved using message-passing inference. Using diverse experimental settings, we show that CHMMs can be used to explain the emergence of context-specific representations, formation of transferable structural knowledge, transitive inference, shortcut finding in novel spaces, remapping of place cells, and hierarchical planning. Structured higher-order graph learning and probabilistic inference might provide a simple unifying framework for understanding hippocampal function, and a pathway for relational abstractions in artificial intelligence.
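To make the role of clones concrete, here is a minimal sketch of forward message passing in a cloned HMM, written with NumPy. It assumes the usual CHMM structure in which each hidden state (clone) emits exactly one observation deterministically, so several clones can share an aliased observation and be told apart only by sequence context. The function name `forward_messages`, the toy transition matrix, and the clone counts are illustrative assumptions, not taken from the paper's code.

```python
import numpy as np

def forward_messages(T, pi, n_clones, obs):
    """Forward pass of a cloned HMM (illustrative sketch).

    T        : (S, S) row-stochastic transition matrix over hidden states
    pi       : (S,) prior over hidden states
    n_clones : number of clones allotted to each observation symbol
    obs      : sequence of integer observation symbols
    Returns the log-likelihood and the final normalized message over the
    clones of the last observation.
    """
    # Clones of symbol k occupy states state_loc[k] : state_loc[k + 1].
    state_loc = np.concatenate(([0], np.cumsum(n_clones)))

    lo, hi = state_loc[obs[0]], state_loc[obs[0] + 1]
    msg = pi[lo:hi].astype(float)
    norm = msg.sum()
    loglik = np.log(norm)
    msg = msg / norm

    for prev, cur in zip(obs[:-1], obs[1:]):
        plo, phi = state_loc[prev], state_loc[prev + 1]
        clo, chi = state_loc[cur], state_loc[cur + 1]
        # Emissions are deterministic, so only the block of T linking the
        # clones of `prev` to the clones of `cur` contributes.
        msg = msg @ T[plo:phi, clo:chi]
        norm = msg.sum()
        loglik += np.log(norm)
        msg = msg / norm
    return loglik, msg

# Toy example (assumed): symbol 0 is aliased across two clones (states 0, 1),
# symbol 1 has one clone (state 2). Transitions form the cycle 0 -> 1 -> 2 -> 0,
# which generates the repeating observation sequence 0, 0, 1.
T = np.array([[0.0, 1.0, 0.0],
              [0.0, 0.0, 1.0],
              [1.0, 0.0, 0.0]])
pi = np.full(3, 1.0 / 3.0)
n_clones = np.array([2, 1])

loglik, final_msg = forward_messages(T, pi, n_clones, [0, 0, 1, 0, 0, 1])
_, after_two = forward_messages(T, pi, n_clones, [0, 0])
```

In this toy case the first observation of symbol 0 leaves both clones equally likely, while seeing symbol 0 twice in a row puts all probability on the second clone: the sequence context resolves the aliasing. Because each step touches only one block of the transition matrix, the cost per step depends on the number of clones of the two symbols involved rather than on the full state space.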
