Space is a latent sequence: Structured sequence learning as a unified theory of representation in the hippocampus

Fascinating and puzzling phenomena, such as landmark vector cells, splitter cells, and event-specific representations to name a few, …

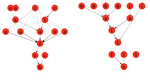

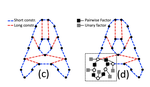

Clone-structured graph representations enable flexible learning and vicarious evaluation of cognitive maps

Cognitive maps are mental representations of spatial and conceptual relationships in an environment, and are critical for flexible …

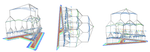

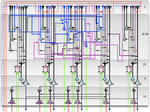

A detailed mathematical theory of thalamic and cortical microcircuits based on inference in a generative vision model

Understanding the information processing roles of cortical circuits is an outstanding problem in neuroscience and artificial …

A Model of Fast Concept Inference with Object-Factorized Cognitive Programs

The ability of humans to quickly identify general concepts from a handful of images has proven difficult to emulate with robots. …

Learning cognitive maps as structured graphs for vicarious evaluation

Cognitive maps enable us to learn the layout of environments, encode and retrieve episodic memories, and navigate vicariously for …

Learning undirected models via query training

Query training is a technique that lets you train graphical models using ideas from deep learning.

Memorize-Generalize: An online algorithm for learning higher-order sequential structure with cloned Hidden Markov Models

Sequence learning is a vital cognitive function and has been observed in numerous brain areas. Discovering the algorithms underlying …

Different clones for different contexts: Hippocampal cognitive maps as higher-order graphs of a cloned HMM

The hippocampus encodes cognitive maps that support episodic memories, navigation, and planning. Understanding the commonality among those …

Learning higher-order sequential structure with cloned HMMs

Variable order sequence modeling is an important problem in artificial and natural intelligence. While overcomplete Hidden Markov …

Beyond Imitation: Zero-shot task transfer on robots by learning concepts as cognitive programs

Concepts are formalized as programs on a special computer architecture called the Visual Cognitive Computer (VCC). By learning programs …

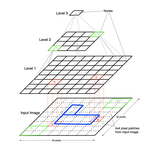

Explaining Visual Cortex Phenomena using Recursive Cortical Network

A hierarchical vision model that emphasizes the role of lateral and feedback connections and treats classification, segmentation …

Cortical micro-circuits from a generative vision model

A hierarchical vision model that emphasizes the role of lateral and feedback connections and treats classification, segmentation …

Behavior is Everything: Towards Representing Concepts with Sensorimotor Contingencies

AI has seen remarkable progress in recent years, due to a switch from hand-designed shallow representations to learned deep …

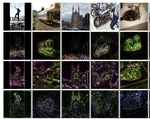

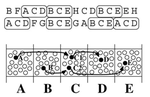

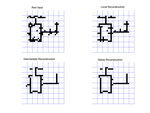

A generative model for vision that trains with high data efficiency and breaks text-based CAPTCHAs

Learning from a few examples and generalizing to markedly different situations are capabilities of human visual intelligence that are …

Schema Networks: Zero-shot transfer with a generative causal model of intuitive physics

The recent adaptation of deep neural network-based methods to reinforcement learning and planning domains has yielded remarkable …

Teaching compositionality to CNNs

Convolutional neural networks (CNNs) have shown great success in computer vision, approaching human-level performance when trained for …

Hierarchical Compositional Feature Learning

We introduce the hierarchical compositional network (HCN), a directed generative model able to discover and disentangle, without …

What can the brain teach us about building artificial intelligence?

This paper is an invited commentary on Lake et al.'s Behavioral and Brain Sciences article titled “Building machines that learn …

Generative shape models

Learning from a few examples and generalizing to markedly different situations are capabilities of human visual intelligence that are …

Towards a Mathematical Theory of Cortical Micro-circuits

The theoretical setting of hierarchical Bayesian inference is gaining acceptance as a framework for understanding cortical computation. …

Sequence memory for prediction, inference and behaviour

In this paper, we propose a mechanism which the neocortex may use to store sequences of patterns. Storing and recalling sequences are …

A Hierarchical Bayesian Model of Invariant Pattern Recognition in the Visual Cortex

We describe a hierarchical model of invariant visual pattern recognition in the visual cortex. In this model, the knowledge of how …

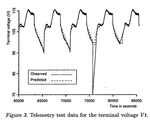

Robust induction of process models from time-series data

In this paper, we revisit the problem of inducing a process model from time-series data. We illustrate this task with a realistic …