Learning undirected models via query training

Abstract

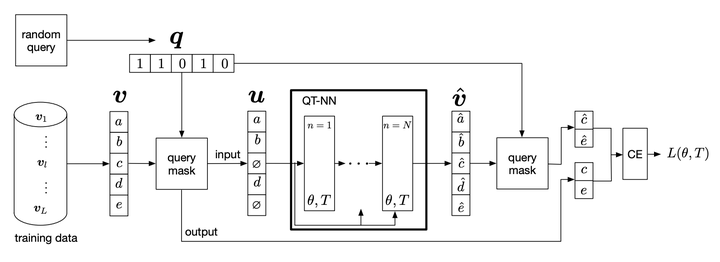

Typical amortized inference in variational autoencoders is specialized for a single probabilistic query. Here we propose an inference network architecture that generalizes to unseen probabilistic queries. Instead of training an encoder-decoder pair, we train a single inference network directly from data, using a cost function that is stochastic not only over samples but also over queries. This network can perform the same inference tasks as an undirected graphical model with hidden variables, without having to deal with the intractable partition function. The results can be mapped to the learning of an actual undirected model, which is a notoriously hard problem. Our network also marginalizes nuisance variables as required. We show that our approach generalizes to unseen probabilistic queries on unseen test data, providing fast and flexible inference. Experiments show that this approach outperforms or matches PCD and AdVIL on 9 benchmark datasets.
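To make the idea of a cost function that is stochastic over both samples and queries more concrete, the following is a minimal sketch, not the architecture used in this work. It assumes binary variables, a query encoded as a random mask that splits each sample into observed evidence and variables to predict, and a cross-entropy loss on the queried variables only. The names `sample_query_mask`, `toy_inference_net`, and `query_training_loss` are hypothetical, and the single linear layer is only a stand-in for the inference network.

```python
import numpy as np

def sample_query_mask(rng, shape, p_observed=0.5):
    """Draw a random query: True marks observed (evidence) variables,
    False marks variables whose marginals the network must predict."""
    return rng.random(shape) < p_observed

def toy_inference_net(x_evidence, mask, W, b):
    """Hypothetical stand-in for the inference network (assumption):
    one linear layer plus sigmoid over [masked evidence, mask]."""
    inp = np.concatenate([x_evidence, mask.astype(float)], axis=1)
    logits = inp @ W + b
    return 1.0 / (1.0 + np.exp(-logits))

def query_training_loss(x, mask, W, b, eps=1e-7):
    """Mean cross-entropy over the queried (unobserved) variables only."""
    x_evidence = np.where(mask, x, 0.0)  # hide the values of queried variables
    probs = np.clip(toy_inference_net(x_evidence, mask, W, b), eps, 1 - eps)
    ce = -(x * np.log(probs) + (1 - x) * np.log(1 - probs))
    target = ~mask
    return (ce * target).sum() / max(target.sum(), 1)

rng = np.random.default_rng(0)
n_vars = 8
x = (rng.random((16, n_vars)) < 0.5).astype(float)  # a mini-batch of binary samples
W = np.zeros((2 * n_vars, n_vars))                  # untrained toy parameters
b = np.zeros(n_vars)

mask = sample_query_mask(rng, x.shape)              # a fresh random query per sample
loss = query_training_loss(x, mask, W, b)
```

In a training loop under these assumptions, a new mask would be drawn at every step, so the same parameters are asked to answer many different conditional queries. With the zero-initialized weights above, every prediction is 0.5, so the loss equals log 2 whenever at least one variable is queried.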