Memorize-Generalize: An online algorithm for learning higher-order sequential structure with cloned Hidden Markov Models

Abstract

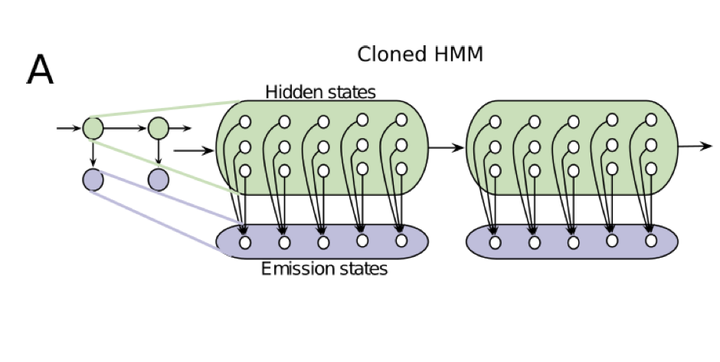

Sequence learning is a vital cognitive function and has been observed in numerous brain areas. Discovering the algorithms underlying sequence learning has been a major endeavour in both neuroscience and machine learning. In earlier work we showed that by constraining the sparsity of the emission matrix of a Hidden Markov Model (HMM) in a biologically plausible manner, we are able to efficiently learn higher-order temporal dependencies and recognize contexts in noisy signals. The central basis of our model, referred to as the Cloned HMM (CHMM), is the observation that cortical neurons sharing the same receptive field properties can learn to represent unique instances of bottom-up information within different temporal contexts. CHMMs can efficiently learn higher-order temporal dependencies, recognize long-range contexts and, unlike recurrent neural networks, natively handle uncertainty. In this paper we introduce a biologically plausible CHMM learning algorithm, memorize-generalize, that can rapidly memorize sequences as they are encountered and gradually generalize as more data is accumulated. We demonstrate that CHMMs trained with the memorize-generalize algorithm can model long-range structure in bird songs with only a slight degradation in performance compared to expectation-maximization, while still outperforming other representations.
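To make the sparsity constraint on the emission matrix concrete, the following is a minimal sketch of one way such a structure could look. It is an illustration under stated assumptions and not the implementation used in this work. It assumes that every hidden state ("clone") emits exactly one symbol deterministically and that every symbol has the same number of clones. The function names `clone_emission_matrix` and `log_likelihood` and the parameter `n_clones` are hypothetical. Under these assumptions the forward pass only needs the block of the transition matrix linking the clones of consecutive observed symbols.

```python
import numpy as np


def clone_emission_matrix(n_symbols, n_clones):
    """Build a sparse emission matrix (assumed structure, for illustration).

    Hidden state i emits symbol i // n_clones with probability 1, so each
    row contains a single nonzero entry.
    """
    n_states = n_symbols * n_clones
    emissions = np.zeros((n_states, n_symbols))
    states = np.arange(n_states)
    emissions[states, states // n_clones] = 1.0
    return emissions


def log_likelihood(seq, transitions, prior, n_clones):
    """Scaled forward pass that only touches clones of the observed symbols.

    seq         : sequence of integer symbols
    transitions : (n_states, n_states) row-stochastic transition matrix
    prior       : (n_states,) initial state distribution
    """
    def clones(symbol):
        # Contiguous block of hidden states assigned to this symbol.
        return slice(symbol * n_clones, (symbol + 1) * n_clones)

    # Initial message: prior mass on the clones of the first symbol.
    alpha = prior[clones(seq[0])]
    norm = alpha.sum()
    log_lik = np.log(norm)
    alpha = alpha / norm

    for prev, cur in zip(seq[:-1], seq[1:]):
        # Only the (clones of prev) x (clones of cur) block is needed,
        # because all other states have zero emission probability.
        alpha = alpha @ transitions[clones(prev), clones(cur)]
        norm = alpha.sum()
        log_lik += np.log(norm)
        alpha = alpha / norm

    return log_lik
```

With a random row-stochastic `transitions` matrix and a uniform `prior`, `log_likelihood` should agree with a standard dense forward pass that uses the matrix returned by `clone_emission_matrix`, while doing only `n_clones * n_clones` work per time step. The different clones of a symbol are what allow the same observation to be represented differently depending on its temporal context.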