Learning higher-order sequential structure with cloned HMMs

Abstract

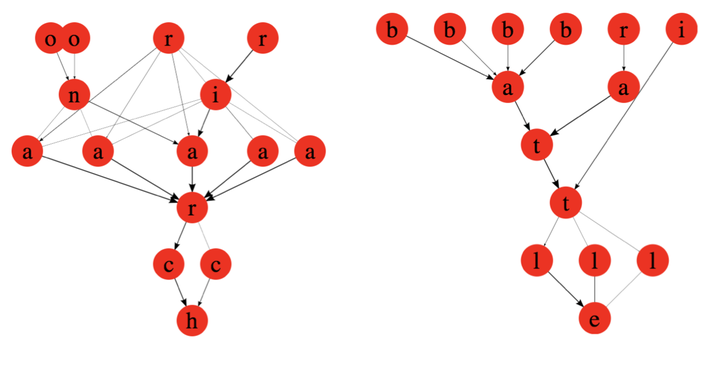

Variable order sequence modeling is an important problem in artificial and natural intelligence. While overcomplete Hidden Markov Models (HMMs), in theory, have the capacity to represent long-term tem- poral structure, they often fail to learn and converge to local minima. We show that by constraining HMMs with a simple sparsity structure inspired by biology, we can make it learn variable order sequences efficiently. We call this model cloned HMM (CHMM) because the sparsity structure enforces that many hidden states map deterministically to the same emission state. CHMMs with over 1 billion parameters can be efficiently trained on GPUs without being severely affected by the credit diffusion problem of standard HMMs. Unlike n-grams and sequence memoizers, CHMMs can model temporal dependencies at arbitrarily long distances and recognize contexts with “holes” in them. Compared to Recurrent Neural Networks and their Long Short-Term Memory extensions (LSTMs), CHMMs are generative models that can natively deal with uncertainty. Moreover, CHMMs return a higher-order graph that represents the temporal structure of the data which can be useful for community detection, and for building hierarchical models. Our experiments show that CHMMs can beat n-grams, sequence memoizers, and LSTMs on character-level language modeling tasks. CHMMs can be a viable alternative to these methods in some tasks that require variable order sequence modeling and the handling of uncertainty.